AI: Beyond Algorithms (Cover Story, March 2025)

Uncertainty has always been an intrinsic part of human existence. No matter how advanced we become as a species, whether through our intellectual endeavors or the marvels of technology, the chaotic nature of life persists. The world, as much as we may wish to control it, often defies our attempts to bring order to it. While many today place their hopes in artificial intelligence (AI) to offer clarity in an increasingly complex world, two new books shed light on why this expectation may be misguided. Instead of hoping that AI will tame the chaos around us, they suggest that we might need to embrace the uncertainties that shape our lives.

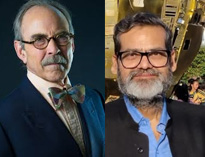

In their respective books, The Art of Uncertainty and The Atomic Human, David Spiegelhalter and Neil D. Lawrence, both professors at the University of Cambridge, explore the fundamental nature of uncertainty and its persistent presence in our daily lives. Spiegelhalter, a renowned statistician, and Lawrence, a specialist in machine learning, draw on their different professional experiences to examine how humanity has historically sought to measure, manage, and cope with uncertainty. Together, their analyses offer a close look at how we perceive risk, how trust is built or eroded, and the role of AI in shaping the modern world.

The Enduring Nature of Uncertainty

The English poet George Meredith captured the frustration of uncertainty more than 150 years ago when he wrote, "What a dusty answer gets the soul when hot for certainties in this our life!" This sentiment is at the heart of both Spiegelhalter's and Lawrence's books. Both authors recognize that uncertainty is not just a byproduct of human ignorance or lack of control but a fundamental aspect of existence. Despite our advances in science and technology, uncertainty remains an unavoidable constant.

In his book, Spiegelhalter discusses the approaches humanity has historically taken to measure uncertainty. He explores how statistical methods, such as frequentist and Bayesian analysis, were developed to bring a measure of predictability to an unpredictable world. Frequentist methods work best when probabilities can be tied to physical, repeatable processes, such as the chance of heads in a coin flip. Bayesian analysis, on the other hand, incorporates subjective judgments about likelihood and is often more adaptable to real-world scenarios, where uncertainty is less tangible.

Neil D. Lawrence brings a different perspective to the discussion, blending his background in machine learning and engineering to explore how uncertainty shapes technological progress. Before his academic career, Lawrence worked as a well-logging engineer on a North Sea drilling platform, where he witnessed firsthand how unpredictable events could disrupt even the most well-planned operations. His experiences in both the corporate and academic worlds give him a unique lens through which to analyze how modern systems, including AI, attempt to grapple with uncertainty.

Trust in the Age of Uncertainty

A central theme that unites both Spiegelhalter and Lawrence's works is the idea of trust. In a world full of uncertainties, trust becomes a critical currency. Without trust, societies cannot function smoothly. Whether it’s trust in governments, institutions, or individuals, this intangible but essential element holds societies together.

Spiegelhalter draws on the work of philosopher Onora O’Neill, particularly her concept of "intelligent transparency." According to O'Neill, for policymakers to foster trust in the face of uncertainty, they must be transparent in a meaningful way. This means not just sharing information but presenting it in a way that allows the public to understand and engage with it. Spiegelhalter argues that this kind of transparency is essential for building trust, especially in an era where misinformation can spread rapidly.

Lawrence also touches on the importance of trust, particularly in the context of AI. Since the rise of generative AI models like ChatGPT, there has been much debate about whether these systems can be trusted. These models process vast amounts of human-generated data and produce responses that often appear thoughtful and accurate. But Lawrence questions whether this trust is warranted. He invokes O'Neill’s argument that trust is not intrinsic to systems, but rather must be earned by the people who operate them. If AI models are divorced from human oversight, how can they be trusted to make decisions that affect our lives?

The Rise of Generative AI

The launch of ChatGPT in late 2022 marked a turning point in public discourse around AI. Generative models like ChatGPT have become a focal point for debates about the future of technology and its role in society. Trained on massive amounts of data, these models generate text and images in response to prompts, leading many to believe that AI could bring order to the chaos of modern life.

However, both Spiegelhalter and Lawrence caution against placing too much faith in these systems. AI models, they argue, are tools created by humans, and like any tool, they have limitations. They are designed to produce plausible-sounding responses, but they do not have a true understanding of the world. This distinction is critical because it means that AI cannot offer the kind of certainty that many hope it will. If anything, relying too heavily on AI could lead to greater uncertainty, as these models are not infallible and are prone to producing misleading or incorrect outputs.

Laplace’s Demon and the Limits of Predictability

At the core of both books is a discussion of the famous thought experiment known as “Laplace’s demon.” In 1814, the French mathematician and astronomer Pierre-Simon Laplace imagined an intellect, later dubbed a demon, with perfect knowledge of the universe’s present state, including all the forces of nature and the positions of every atom. With this knowledge, it could predict the future with absolute certainty, rendering the concept of chance obsolete.

Laplace’s demon represents a deterministic view of the universe, where everything follows a predictable path. However, both Spiegelhalter and Lawrence argue that our world is far from deterministic. Despite our best efforts to understand and control our environment, uncertainty persists. Lawrence refers to this reality as “Laplace’s gremlin,” a nod to the fact that unpredictability remains a defining feature of human life. No matter how sophisticated our tools become, there will always be factors beyond our control—whether it’s blind chance, luck, or ignorance—that shape the course of events.

Embracing Uncertainty

Ultimately, both authors come to a similar conclusion: uncertainty is here to stay. While we can develop better tools to manage risk and navigate complexity, we cannot eliminate uncertainty entirely. This realization forces us to reconsider our relationship with uncertainty. Rather than trying to banish it, we must learn to live with it. Trust, transparency, and human oversight will remain crucial as we move forward into an increasingly unpredictable world.

In a time when many are looking to technology to bring clarity and control, Spiegelhalter and Lawrence offer a sobering reminder that uncertainty is not something we can ever fully escape. It is an inherent part of the human condition, and learning to navigate it—rather than conquer it—may be the key to thriving in the 21st century.

Taming Uncertainty: Navigating the Unpredictable with Spiegelhalter's Insights

Uncertainty, an intrinsic aspect of human existence, has captivated scholars, scientists, and philosophers for centuries. It is not simply an abstract concept but a real, tangible force that impacts our daily lives and decisions. In "The Art of Uncertainty," David Spiegelhalter explores humanity's ongoing struggle to understand, manage, and make sense of the unpredictable world around us. Through the lens of probability theory, he presents an engaging analysis of how we grapple with uncertainty, highlighting its profound effects on our perceptions, choices, and models of reality. Spiegelhalter's work sheds light on how probabilities are intertwined with both randomness and our own ignorance, offering readers a deeper understanding of the complex forces that shape our futures.

Personalizing Probability and the Nature of Uncertainty

One of Spiegelhalter's core arguments is that probability is not an external, objective force that exists independently of human observation. Instead, it is highly personal and shaped by our experiences, knowledge, and biases. This perspective challenges the traditional view that probabilities are purely mathematical constructs waiting to be discovered. Rather, they represent our relationship with uncertainty, reflecting the degree to which we are conscious of our own ignorance.

To illustrate this point, Spiegelhalter introduces a classic example: the simple act of flipping a coin. In this scenario, he explains that two types of uncertainty are at play. The first is aleatory uncertainty, which refers to the inherent randomness of an event like a coin toss. The second is epistemic uncertainty, which arises from a lack of knowledge about an event that has already occurred (such as whether the coin has landed on heads or tails). While we can model the probability of a coin landing on heads or tails (aleatory), we cannot know the outcome of a particular toss unless we directly observe it (epistemic).

Spiegelhalter uses this example as a gateway to understanding how statistical analysis can help us reason about uncertainty in more complex scenarios. For instance, when rolling a fair six-sided die, symmetry tells us that each face has an equal, one-in-six chance of landing face-up, and the frequentist approach interprets that probability as the proportion of rolls showing each face over a long series of throws. This lets us quantify how likely each possible outcome is, even though we cannot say which face will come up next. However, the situation becomes significantly more challenging when the outcome cannot be clearly defined by physical constraints or when human behavior is involved.
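The frequentist reading of the die example can be sketched as a short simulation. This is an illustration, not an example taken from Spiegelhalter's book: it assumes a perfectly fair die and uses Python's seeded pseudo-random generator in place of physical rolls. As the number of rolls grows, the share of rolls landing on each face should settle close to 1/6.

```python
import random
from collections import Counter

def face_frequencies(n_rolls, seed=0):
    """Simulate n_rolls of a fair six-sided die; return the share of rolls per face."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    counts = Counter(rng.randint(1, 6) for _ in range(n_rolls))
    return {face: counts[face] / n_rolls for face in range(1, 7)}

for n in (60, 6_000, 60_000):
    freqs = face_frequencies(n)
    # Largest distance between any face's observed share and the theoretical 1/6
    largest_gap = max(abs(share - 1 / 6) for share in freqs.values())
    print(f"{n:>6} rolls: largest gap from 1/6 = {largest_gap:.4f}")
```

The gap typically shrinks as the number of rolls grows, which is the long-run-frequency idea behind the frequentist view; any single roll remains as unpredictable as ever.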

The Limitations of Models and the Role of Game Theory

Spiegelhalter emphasizes that while models can help us navigate uncertainty, they are not perfect representations of reality. A model, like a map, is a useful abstraction that simplifies the world, but it can never capture all of its complexities. This insight is crucial when dealing with human behavior, which is often difficult to predict with accuracy.

For example, game theory has added rigor to our understanding of strategic decision-making, in which each participant must respond not only to others' actions but also to expectations about those actions. Yet, as financier George Soros argued in his theory of reflexivity, participants' expectations can shape the very outcomes they are trying to predict, and those outcomes in turn reshape expectations. This recursive feedback loop adds another layer of complexity and strains our capacity to model and predict human decisions.

Spiegelhalter reminds us that all models, no matter how sophisticated, are inherently limited. They are approximations of reality, not reality itself. This acknowledgment of the imperfection of models is a central theme in his work, highlighting the need for flexibility, skepticism, and continuous reassessment when dealing with uncertainty.

The Power of Bayesian Analysis

One of the most powerful tools in probability theory, according to Spiegelhalter, is Bayes' Theorem. Formulated by the English minister Thomas Bayes in the 18th century and published after his death in 1763, this theorem transformed the way we think about probabilities. At its core, Bayes' Theorem allows us to update our beliefs in light of new evidence. It converts a prior probability (our initial assessment of how likely an outcome is) into a posterior probability (the updated assessment after considering new evidence) by weighing how probable that evidence would be if the outcome were true, a quantity known as the likelihood.
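In symbols, writing H for a hypothesis (the outcome in question) and E for the new evidence, the theorem is usually stated as follows. P(H) is the prior, P(E | H) the likelihood, and P(H | E) the posterior; the second expression is the standard law-of-total-probability expansion of the denominator.

```latex
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)},
\qquad
P(E) = P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)
```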

Spiegelhalter demonstrates the practical applications of Bayes' Theorem through thought-provoking examples. For instance, why might more vaccinated individuals die of COVID-19 than unvaccinated individuals? At first glance, this might seem counterintuitive. But through Bayesian reasoning, we can account for the fact that, in a population where most people are vaccinated, there will be more vaccinated individuals overall, and hence, a greater absolute number of deaths in this group, even though the risk of death for vaccinated individuals is lower.
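A minimal worked version of this base-rate effect follows. The figures are hypothetical, chosen only to keep the arithmetic simple: 90% of a population of one million is vaccinated, and vaccination is assumed to cut the risk of death five-fold, from 0.1% to 0.02%. Exact fractions from Python's standard library avoid floating-point noise.

```python
from fractions import Fraction

def covid_deaths(population, share_vaccinated, risk_vaccinated, risk_unvaccinated):
    """Expected deaths per group, plus P(vaccinated | died) from Bayes' theorem."""
    vaccinated = population * share_vaccinated
    unvaccinated = population - vaccinated
    deaths_vaccinated = vaccinated * risk_vaccinated
    deaths_unvaccinated = unvaccinated * risk_unvaccinated
    # Denominator: overall probability of death, P(D), by the law of total probability
    p_death = share_vaccinated * risk_vaccinated + (1 - share_vaccinated) * risk_unvaccinated
    # Bayes: P(V | D) = P(D | V) * P(V) / P(D)
    p_vaccinated_given_death = risk_vaccinated * share_vaccinated / p_death
    return deaths_vaccinated, deaths_unvaccinated, p_vaccinated_given_death

# Hypothetical illustrative figures, not real COVID-19 data
dv, du, p = covid_deaths(
    population=1_000_000,
    share_vaccinated=Fraction(9, 10),
    risk_vaccinated=Fraction(2, 10_000),     # 0.02%
    risk_unvaccinated=Fraction(10, 10_000),  # 0.1%
)
print(dv, du, p)  # → 180 100 9/14
```

Although each vaccinated person's risk is a fifth of an unvaccinated person's, the vaccinated group accounts for 180 of the 280 deaths, so 9/14 (about 64%) of those who died were vaccinated. Real data involve further factors, such as age, which this sketch ignores.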

Another example involves police imaging software that flags potential threats. Using Bayesian analysis, we can assess the likelihood that a person flagged by the software is actually a threat, considering factors like the overall accuracy of the software and the prevalence of threats in the population.
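The same reasoning can be sketched for a flagging system. The numbers are assumptions for illustration, not figures from the book: one person in 10,000 is a genuine threat, the software flags 90% of genuine threats, and it wrongly flags 1% of everyone else.

```python
from fractions import Fraction

def p_threat_given_flag(prevalence, sensitivity, false_positive_rate):
    """Bayes' theorem: probability that a flagged person really is a threat."""
    true_flags = sensitivity * prevalence                  # P(flag | threat) * P(threat)
    false_flags = false_positive_rate * (1 - prevalence)   # P(flag | no threat) * P(no threat)
    return true_flags / (true_flags + false_flags)

# Hypothetical figures chosen for illustration
p = p_threat_given_flag(
    prevalence=Fraction(1, 10_000),
    sensitivity=Fraction(9, 10),
    false_positive_rate=Fraction(1, 100),
)
print(p, f"≈ {float(p):.2%}")  # → 10/1121 ≈ 0.89%
```

Because genuine threats are so rare, fewer than 1 in 100 flagged people is actually a threat in this scenario, despite the software's apparently high accuracy.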

These examples underscore a key point: probabilities are not always a function of physical properties, like those of a coin or a die. Often, they are shaped by subjective expectations and interpretations of evidence. Bayes' Theorem provides a framework for incorporating this subjectivity into our analysis, allowing us to refine our understanding of uncertainty as new information becomes available.

Cromwell’s Rule: Embracing Uncertainty

Despite the power of probabilistic reasoning, Spiegelhalter acknowledges that our ability to tame uncertainty is inherently limited. He draws attention to Cromwell’s Rule, which cautions against assigning a probability of zero or one to any event unless it is logically impossible or logically certain. The statistician Dennis Lindley named the rule after Oliver Cromwell’s 1650 plea to the General Assembly of the Church of Scotland: "I beseech you, in the bowels of Christ, think it possible that you may be mistaken." The rule serves as a reminder to remain open to the possibility of error and reassessment, especially in complex, real-world situations.

In practical terms, Cromwell’s Rule warns us not to be overly confident in our predictions. Even when probabilities seem low, there is always a chance that the unexpected will occur. Spiegelhalter uses this rule to highlight the dangers of absolute certainty in an uncertain world. Outside the realm of formal logic, where outcomes can be clearly defined, there is always room for doubt, ambiguity, and surprise.
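Why a probability of exactly zero is so dangerous becomes clear when Bayes' theorem is applied repeatedly. In this sketch, with hypothetical numbers, each new piece of evidence is ten times more likely if the hypothesis is true than if it is false. A small but non-zero prior climbs toward certainty, while a prior of zero stays at zero no matter how much evidence arrives.

```python
from fractions import Fraction

def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """One application of Bayes' theorem: return P(H | E) given P(H) and the two likelihoods."""
    numerator = prior * p_evidence_if_true
    return numerator / (numerator + (1 - prior) * p_evidence_if_false)

def after_evidence(prior, n_observations, p_if_true=Fraction(9, 10), p_if_false=Fraction(9, 100)):
    """Update the same belief n times; by default each observation has likelihood ratio 10."""
    belief = Fraction(prior)
    for _ in range(n_observations):
        belief = bayes_update(belief, p_if_true, p_if_false)
    return belief

open_minded = after_evidence(Fraction(1, 1000), 5)  # 100000/100999, about 0.99
dogmatic = after_evidence(Fraction(0), 5)           # stays exactly 0
print(float(open_minded), dogmatic)
```

A prior of exactly one is equally stuck: every update returns one, whatever the evidence says.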

Embracing the Uncertainty of Life

In "The Art of Uncertainty," David Spiegelhalter offers a compelling exploration of how we perceive, model, and navigate the unpredictability of life. By blending statistical analysis with philosophical insights, he invites readers to embrace uncertainty as an inescapable part of the human condition. Rather than seeking to eliminate uncertainty, we must learn to live with it, using tools like Bayesian analysis and game theory to make more informed decisions. At the same time, we must remain humble in the face of uncertainty, acknowledging the limitations of our models and our knowledge.

Ultimately, Spiegelhalter’s work serves as a reminder that uncertainty is not something to be feared but understood. In a world where unpredictability is the norm, the art of uncertainty lies in our ability to adapt, reassess, and navigate the complexities of life with curiosity and openness.

The Trusting Animal: Expanding the Concept of Human Intelligence in a World of AI

In The Art of Uncertainty, Spiegelhalter presents a nuanced argument against the idea of radical uncertainty. While Frank Knight and John Maynard Keynes famously argued that there are circumstances where “we just don’t know,” Spiegelhalter takes a different view of the human relationship with unpredictability. He rejects the notion that we are entirely blind to the future, suggesting instead that uncertainty is something we can manage, though never fully control. By emphasizing our personal relationship with uncertainty, Spiegelhalter acknowledges the limits of formal analysis without abandoning it. His argument is both philosophical and practical, revealing how humans approach the unknown and how subjective probabilities shape our understanding of events.

Spiegelhalter's “personal conclusion” illuminates the tension between analytical rigor and the need for adaptive thinking in the face of deeper, ontological uncertainty. This kind of uncertainty goes beyond gaps in our knowledge and touches the inherent unpredictability of existence itself. The second law of thermodynamics, for instance, states that the entropy of an isolated system never decreases, so order tends to give way to disorder. Spiegelhalter accepts such unpredictability as part of life and insists that, rather than relying solely on formal models, humans must develop strategies that can adapt to both foreseeable and unforeseeable outcomes. In this way, uncertainty becomes not something to fear but a challenge that humanity has faced throughout its history.

This relationship between uncertainty and adaptability is central to Lawrence’s The Atomic Human, which examines the human essence in the context of technological advancement, particularly in artificial intelligence (AI). Lawrence's account of human intelligence parallels Spiegelhalter's emphasis on the adaptability required to thrive in an uncertain world. He introduces the idea of the “atomic human,” a metaphor for the indivisible core of human intelligence, which on his account emerges from our ability to adapt to unpredictable conditions. Lawrence’s analysis bridges AI and human cognition, raising a question that runs through the history of technology: can machines ever truly replicate human intelligence?

The Limits of Formal Analysis and the Power of Adaptability

One of the most compelling examples of this adaptability is General Dwight Eisenhower’s decision-making on the eve of D-Day during World War II. As commander of the Allied forces, Eisenhower had access to a vast amount of intelligence, including critical decrypts of German ciphers broken at Bletchley Park, where Alan Turing worked. Yet even with all the available information, Eisenhower had to make a judgment call under uncertainty. In making this decision, he embodied the “atomic human” by acknowledging the limits of formal analysis and embracing the unknown with personal conviction. His act of writing a memorandum accepting full responsibility in the event of failure is a powerful testament to human intelligence's capacity to navigate uncertainty through trust and judgment, rather than cold calculation alone.

This episode resonates deeply with Lawrence’s broader thesis about the role of intelligence in human history. He argues that human cognitive power evolved through natural selection to deal with the unpredictability inherent in the environment. Over time, humans developed the ability to communicate complex narratives, sharing knowledge and experiences to build trust and cooperation within societies. This capacity for communication and narrative construction is what sets humans apart from machines. It is an evolutionary trait that allows us to form “theories of mind,” meaning we are capable of modeling other people’s thoughts and intentions—something that artificial intelligence, at least in its current form, cannot replicate.

AI and the Problem of Reflexive Intelligence

Lawrence contrasts this adaptability with the inherent limitations of artificial intelligence, particularly large language models (LLMs). Unlike human intelligence, which thrives on slow, deliberate communication and narrative-building, LLMs are fundamentally probabilistic prediction machines. They process vast amounts of data to generate outputs that mimic human language, but they lack true understanding or awareness of their own limitations. This creates what Lawrence refers to as “the great AI fallacy”—the erroneous belief that AI systems have achieved a form of intelligence that can rival human understanding.
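The phrase “probabilistic prediction machine” can be made concrete with a toy next-word model. This hypothetical sketch is a drastic simplification, not how production LLMs work (they use neural networks over sub-word tokens and condition on long contexts), but it captures the core idea: the next word is predicted from probabilities estimated from patterns in training text, with no notion of what the words mean.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count how often each word is followed by each other word in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Convert the follow-counts for `word` into a probability distribution over the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        return {}  # word never seen with a successor: the model has nothing to say
    return {w: c / total for w, c in counts.items()}

model = train_bigrams("the cat sat on the mat the cat ate the fish")
print(next_word_probabilities(model, "the"))  # → {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(next_word_probabilities(model, "dog"))  # → {}
```

The model will confidently continue "the" with "cat" half the time simply because that pairing was frequent, which is statistical association rather than understanding.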

The fallacy, as Lawrence explains, is rooted in a misunderstanding of what AI is actually doing. These systems do not engage in causal reasoning or reflect on the meaning of their outputs. Instead, they rely on statistical associations within the data they have been trained on, producing predictions that may seem accurate but lack the depth of human reasoning. Judea Pearl, a leading expert on causality, has highlighted this limitation by explaining that machine learning models excel at estimating probability distributions but fail to move beyond those estimates to understand cause-and-effect relationships. In other words, AI systems are excellent at pattern recognition but are far from achieving true intelligence as humans understand it.
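Pearl's distinction between observing an association and intervening on a cause (“seeing” versus “doing”) can be illustrated with a small simulation. The variables and probabilities are invented for illustration: a hidden common cause Z (say, hot weather) raises the chances of both X (ice-cream sales) and Y (drownings), while X has no effect on Y at all. A system that learns only from observed data sees a strong link between X and Y; forcing X to a value, as an intervention would, makes the link vanish. In expectation the observed rates are 0.25 and 0.10, while both interventional rates are 0.175.

```python
import random

def simulate(n, seed=1, force_x=None):
    """Draw n (x, y) pairs. Z drives both X and Y; X never affects Y.
    If force_x is given, X is set from outside, mimicking an intervention do(X = force_x)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                      # hidden common cause
        if force_x is None:
            x = rng.random() < (0.8 if z else 0.2)  # observed X depends only on Z
        else:
            x = force_x                             # intervention cuts the Z -> X link
        y = rng.random() < (0.3 if z else 0.05)     # Y depends only on Z
        rows.append((x, y))
    return rows

def rate_of_y(rows, x_value):
    """Share of rows with Y true among rows where X equals x_value."""
    ys = [y for x, y in rows if x == x_value]
    return sum(ys) / len(ys)

observed = simulate(50_000)
print("seeing: P(Y | X=1)     =", round(rate_of_y(observed, True), 3))
print("seeing: P(Y | X=0)     =", round(rate_of_y(observed, False), 3))
print("doing:  P(Y | do(X=1)) =", round(rate_of_y(simulate(50_000, force_x=True), True), 3))
print("doing:  P(Y | do(X=0)) =", round(rate_of_y(simulate(50_000, force_x=False), False), 3))
```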

The Atomic Human and the Future of AI

In Lawrence’s view, the future of AI may involve hybrid systems that combine the strengths of both human and machine intelligence. He envisions a “human-analogue machine” (HAM) that could act as an extension of human cognitive abilities. Such a system would not replace human intelligence but would augment it, allowing people to navigate complex, uncertain environments more effectively. However, Lawrence is quick to caution against the temptation to view AI as a replacement for human intelligence. He argues that humanity’s unique strength lies in its vulnerabilities—our ability to second-guess ourselves, to build trust through communication, and to adapt to changing circumstances.

This caution is further emphasized in the context of technological history. As Lawrence traces the development of computing, from early cybernetic systems to today’s neural networks and machine learning algorithms, he underscores the fact that each technological breakthrough has been driven by human ingenuity. Yet, despite these advances, the human essence remains irreplaceable. Machines may be powerful tools, but they are ultimately limited by their inability to adapt to the unknown in the way that humans can. As Spiegelhalter and Lawrence both acknowledge, uncertainty is an inescapable part of life, and our ability to cope with it depends on more than just formal analysis.

Taming Uncertainty: A Broader Perspective

At the heart of both Spiegelhalter’s and Lawrence’s analyses is the recognition that uncertainty is not something that can be fully tamed. Instead, it must be embraced as a fundamental aspect of human existence. This perspective challenges the notion that AI will ever achieve true intelligence, as it cannot replicate the adaptability, trust, and self-awareness that define human cognition. While AI can process vast amounts of data and make predictions based on that data, it lacks the ability to reflect on its own limitations or to build narratives that foster trust and cooperation.

In this sense, the “atomic human” concept serves as a reminder of what makes human intelligence unique. It is not just our ability to calculate probabilities or analyze data that sets us apart from machines; it is our capacity for reflection, communication, and adaptability in the face of uncertainty. As we continue to develop AI technologies, it is crucial that we remember these strengths and avoid falling into the trap of believing that machines can fully replace human intelligence.

Embracing Uncertainty

Both Spiegelhalter’s The Art of Uncertainty and Lawrence’s The Atomic Human offer valuable insights into the nature of human intelligence and its relationship with uncertainty. While technological advancements in AI have created powerful tools for analyzing data and making predictions, these systems are ultimately limited by their inability to replicate the unique qualities of human cognition. As we move forward in an increasingly uncertain world, it is essential to recognize that our greatest strength lies not in our ability to eliminate uncertainty, but in our capacity to adapt, communicate, and build trust in the face of the unknown.

DATA OR DRIVEL? The Limits of Data, Uncertainty, and Human Decision-Making

In an era when data is often regarded as the ultimate tool for decision-making, there is a growing tension between our reliance on data and the inherent limits of what data alone can achieve. Both Lawrence and Spiegelhalter celebrate the human capacity to process data in ways that allow us to make informed decisions. Yet they acknowledge that data, devoid of context, is essentially meaningless. The crux of the matter lies in how we interpret data and give it meaning. Without context, and in a world of inherent uncertainty, data-driven decisions can falter, especially in unpredictable scenarios.

AI and Bias in Criminal Justice

The increasing use of artificial intelligence in various sectors, particularly in the U.S. criminal justice system, offers a stark example of how data can mislead. AI systems are being used to recommend criminal sentences and evaluate parole applications. On the surface, this seems like a logical progression—using data to ensure fairness and consistency. However, these AI systems reflect the biases and prejudices embedded in the datasets they were trained on. As a result, rather than mitigating inequality, they can reinforce existing biases. For instance, data about previous sentencing trends in certain demographics can perpetuate unfair sentencing in the future, exacerbating social inequalities. Without a conscious effort to address these biases, data-driven AI systems risk being just as flawed as the human decision-makers they aim to replace.

The underlying issue is not merely technical but philosophical—can we trust the data we use? This question leads us to a deeper challenge: ontological uncertainty.

The Problem of Ontological Uncertainty

Spiegelhalter introduces the concept of ontological uncertainty—the idea that we cannot always predict or even list all the possible future states of the world. This uncertainty is not merely statistical but fundamental to the nature of reality. Human intelligence has evolved over millions of years in response to a world filled with surprises, upheavals, and unpredictable changes. Yet, in today’s data-driven society, we are increasingly relying on the assumption that the processes generating the data we observe will remain consistent over time. But what if they don’t? Can we really trust data when the conditions that generated it may change unpredictably?

This concern is not new. In his 2015 book Post Keynesian Theory and Policy, economist Paul Davidson pointed out a critical flaw in mainstream economic thinking: the belief that past data can be used to make statistically sound forecasts about the future. Davidson questioned the assumption that economic systems are governed by stable, ergodic processes, that is, processes in which averages computed from past observations reliably estimate future ones. In his view, the economy is non-ergodic, so future outcomes cannot always be predicted from past data.
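What "non-ergodic" means can be sketched with a standard textbook illustration. The gamble is hypothetical and is not taken from Davidson or from either book: each round, a player's wealth is multiplied by 1.5 on heads or 0.6 on tails. Averaged across many players at once, expected wealth grows by 5% a round; followed along a single history, a typical player's wealth shrinks. When the average across possible histories and the average along one history diverge like this, the process is non-ergodic, and the first kind of average is a poor guide to what any one path will experience.

```python
import random
import statistics

def final_wealth(rounds, rng):
    """Follow one player: each round wealth is multiplied by 1.5 (heads) or 0.6 (tails)."""
    wealth = 1.0
    for _ in range(rounds):
        wealth *= 1.5 if rng.random() < 0.5 else 0.6
    return wealth

ROUNDS = 20
rng = random.Random(42)
players = [final_wealth(ROUNDS, rng) for _ in range(20_000)]

expected_wealth = 1.05 ** ROUNDS      # ensemble view: average factor per round is (1.5 + 0.6) / 2
typical_wealth = 0.9 ** (ROUNDS / 2)  # time view: per-round growth is sqrt(1.5 * 0.6) = sqrt(0.9)
share_poorer = sum(w < 1 for w in players) / len(players)

print(f"expected (ensemble-average) wealth: {expected_wealth:.2f}")
print(f"median simulated wealth:            {statistics.median(players):.2f}")
print(f"typical single-path wealth:         {typical_wealth:.2f}")
print(f"share of players who end up poorer: {share_poorer:.0%}")
```

After 20 rounds the expected wealth is about 2.65 times the starting stake, yet the median player ends with roughly 0.35 of it, and about three in four players end up poorer.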

To illustrate this, imagine a young financial analyst working at a French bank in 1913, tasked with forecasting Russian bond prices for the next five years. Because France was a major source of capital for Czarist Russia, the analyst would have had plenty of historical data. That data would have reflected Russia’s defeat in the Russo-Japanese War of 1904–05, the popular uprising of 1905, and gradual industrialization. But could it have predicted the complete collapse of the Russian Empire by 1918? The Bolshevik Revolution led to the repudiation of all Czarist bonds, rendering them worthless. This example underscores how past data, no matter how rich or detailed, cannot always predict disruptive events.

The Financial Crisis and the Illusion of Control

The 2008 global financial crisis serves as another example of how relying on past data can create a false sense of security. Before the crisis, economists and financial institutions believed that they had developed sophisticated models to manage risk. They assumed that uncertainty could be controlled through strategies like hedging and increasing capital reserves. However, when the housing bubble burst and the crisis unfolded, it became clear that these models were not prepared for the magnitude of the collapse. The assumption that financial markets behaved according to predictable patterns was shattered. In response, regulators introduced measures to address the failings exposed by the crisis, but these measures were backward-looking, designed to prevent a repeat of the 2008 crisis, not to foresee the next one.

As Spiegelhalter observes, "our imagination operates in tandem with the world around it and relies on that world to provide the consistency it needs." But the world does not remain consistent—it is constantly shaped by disruptions, regime changes, and revolutions. History, as Lawrence points out, is marked by unpredictability, and this unpredictability challenges the very foundation of data-driven decision-making.

Risk, Uncertainty, and Ignorance: Zeckhauser’s Model

Economist Richard Zeckhauser offers a framework for understanding different levels of knowledge about the state of the world, and how these correspond to different decision-making environments. Zeckhauser distinguishes between three domains: risk, uncertainty, and ignorance.

In situations of risk, both the possible outcomes and their probabilities are known. This is the domain where statistical models and data can be most effective. For example, an investor choosing between two stocks can use historical data to assess the likelihood of different returns.

In situations of uncertainty, the possible outcomes are known, but their probabilities are not. For example, an entrepreneur launching a new product might know that it could either succeed or fail but has no way of knowing the exact probabilities of each outcome.

Finally, ignorance refers to situations where even the possible outcomes are unknown. This is the most challenging domain because decision-makers must make choices without knowing all the potential risks. Ignorance often arises in scenarios involving new technologies or unprecedented events. In these cases, decisions are based more on conjecture and deduction than on hard data.

Zeckhauser’s model highlights a key limitation of data-driven decision-making. While data can help us navigate situations of risk, it is less useful in situations of uncertainty and ignorance. In these cases, we must rely on judgment, intuition, and imagination—qualities that AI systems, for all their power, still lack.
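Zeckhauser's three domains can be read as a rule about what data can and cannot compute. The sketch below is an illustrative encoding, not Zeckhauser's own formalism: the class and function names are hypothetical, the stock's payoffs and probabilities are made up, and the uncertainty branch simply reports the range of payoffs because, without probabilities, no expected value exists.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass
class Situation:
    outcomes: Optional[Sequence[float]]       # possible payoffs, or None if even these are unknown
    probabilities: Optional[Sequence[float]]  # one per outcome, or None if unknown

def domain(s: Situation) -> str:
    """Classify a decision into Zeckhauser's domains of risk, uncertainty, or ignorance."""
    if s.outcomes is None:
        return "ignorance"
    if s.probabilities is None:
        return "uncertainty"
    return "risk"

def what_data_offers(s: Situation) -> str:
    """Describe what can be calculated in each domain."""
    kind = domain(s)
    if kind == "risk":
        expected = sum(p * x for p, x in zip(s.probabilities, s.outcomes))
        return f"risk: expected payoff {expected:.2f}"
    if kind == "uncertainty":
        return f"uncertainty: payoffs range from {min(s.outcomes)} to {max(s.outcomes)}, but no expected value"
    return "ignorance: no model to compute with; judgment and imagination take over"

# Hypothetical examples echoing the text
stock = Situation(outcomes=[-10, 5, 20], probabilities=[0.2, 0.5, 0.3])
product_launch = Situation(outcomes=[-50, 100], probabilities=None)
new_technology = Situation(outcomes=None, probabilities=None)

for situation in (stock, product_launch, new_technology):
    print(what_data_offers(situation))
```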

The Self-Fulfilling Nature of Uncertainty

Spiegelhalter acknowledges that sometimes, "we cannot conceptualize all the possibilities" and "we may just have to admit we don’t know." This admission is crucial because it recognizes that uncertainty is not just an external challenge but also a self-fulfilling one. In his famous "beauty contest" metaphor, John Maynard Keynes described how people’s expectations about the future are shaped by what they believe others will do. In such situations, uncertainty can create feedback loops, where everyone is trying to predict the behavior of everyone else, leading to herding behavior and market bubbles.
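Keynes's metaphor was a newspaper competition in which entrants had to pick the faces that other entrants would judge prettiest. Experimental economists later turned it into the “guess two-thirds of the average” game, often analyzed with level-k reasoning. A minimal sketch of that reasoning, assuming a naive starting guess of 50: each extra level of “what do others think others think?” pushes the guess lower, and if everyone reasons all the way down, the only consistent answer is 0.

```python
def level_k_guesses(max_level, naive_guess=50.0, fraction=2 / 3):
    """Guesses in a 'guess 2/3 of the average' game under level-k reasoning.

    Level 0 guesses naively; each higher level best-responds to a crowd
    assumed to reason one level lower, i.e. guesses fraction * previous guess.
    """
    guesses = [naive_guess]
    for _ in range(max_level):
        guesses.append(guesses[-1] * fraction)
    return guesses

for level, guess in enumerate(level_k_guesses(6)):
    print(f"level {level}: guess {guess:.1f}")
```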

This dynamic is particularly evident in financial markets, where uncertainty about future prices can lead to speculative bubbles. Investors, unsure of the true value of an asset, may base their decisions on what they think others believe, rather than on objective data. As more investors pile into the market, prices rise, reinforcing the belief that the asset is valuable. But when the bubble bursts, the same feedback loop drives prices down, as everyone rushes to sell.
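This feedback loop can be caricatured with a toy trend-following model. Everything in it is an assumption for illustration, not a model from either book: traders extrapolate the most recent price change (the hypothetical `momentum` parameter), only a weak pull (`anchoring`) draws the price back toward a fundamental value, and the episode starts from a single piece of good news that lifts the price from 100 to 105.

```python
def trend_following_prices(steps, fundamental=100.0, shock=5.0, momentum=0.9, anchoring=0.1):
    """Toy price path: traders extrapolate the last price change (momentum),
    while a weak pull draws the price back toward an assumed fundamental value."""
    prices = [fundamental, fundamental + shock]  # a one-off piece of good news
    for _ in range(steps):
        trend = prices[-1] - prices[-2]          # feedback: rising prices attract buyers
        pull = fundamental - prices[-1]          # weak anchoring to "true" value
        prices.append(prices[-1] + momentum * trend + anchoring * pull)
    return prices

path = trend_following_prices(30)
print(f"peak {max(path):.1f}, trough {min(path):.1f}")
```

With these made-up parameters the price keeps climbing after the news, peaking near 113, then reverses and overshoots to about 92 before settling: the same extrapolation that fuels the rise drives the fall.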

Conclusion: The Limits of Data and the Need for Judgment

In the end, the message of Lawrence and Spiegelhalter is clear: data is a valuable tool, but it is not a panacea. The world is too complex, too uncertain, and too unpredictable to be fully captured by data alone. We must recognize the limits of our models and be humble in the face of uncertainty. In situations of risk, data can guide us. But in situations of uncertainty and ignorance, we must rely on human judgment, creativity, and adaptability.

This does not mean that we should abandon data-driven decision-making. On the contrary, data can provide valuable insights and help us navigate many of the challenges we face. But we must always be aware of its limitations and resist the temptation to over-rely on it. As Spiegelhalter reminds us, "sometimes we may just have to admit we don’t know." In those moments, it is our human capacity for reflection, imagination, and collaboration that will guide us through the unknown.

This article draws on insights from William H. Janeway’s piece, In AI We Trust, with additional input from senior journalist and editor Sanjay Srivastava to offer a broader understanding. We use these insights, with due respect, because Janeway raises significant concerns about the potential impact of AI on human values; we share those concerns and aim to expand upon them. William H. Janeway is a distinguished affiliated professor of economics at the University of Cambridge and the author of Doing Capitalism in the Innovation Economy (Cambridge University Press, 2018).